[0.0.0] Wavy Grains - GPU granular synthesis

A few months back, after I heard about SYNTH Jam, an idea wormed its way deep into my brain. The result of my hubris is this synthesizer project: Synulator (Synthesized Granulated Oscillator).

I created a synthesizer in Unity for a previous project, but ran into limitations modeling timbres in real time (also called "voices": the reason a piano doesn't sound like a trumpet). The instrument could generate in-phase sine waves, independent of frequency and time. But modeling too many harmonic frequencies overwhelmed my CPU, limiting the timbres I could construct. Unity requests procedural audio in fixed-size buffers whenever the engine needs to play sound, so when the calculations took too long, the beautiful string instrument harmonics turned into stuttering, painful sounds.
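The minimal additive sketch below shows why the CPU approach hit a wall. It is not the original Unity code. The 48 kHz sample rate and the `fill_buffer` helper are assumptions for illustration. Each harmonic costs one sine evaluation per sample. A 1024-sample buffer with 64 harmonics therefore needs 65,536 `math.sin` calls, and all of them must finish before the engine asks for the next buffer.

```python
import math

SAMPLE_RATE = 48000  # assumption: the real output rate depends on the platform


def fill_buffer(buffer_size, fundamental, harmonic_amps, start_sample):
    """Additively sum harmonics into one mono buffer (hypothetical helper).

    Harmonic k (1-based) runs at k * fundamental. The phase is derived from the
    absolute sample index, so consecutive buffers join without discontinuities.
    Cost: buffer_size * len(harmonic_amps) sine evaluations per call.
    """
    out = [0.0] * buffer_size
    for i in range(buffer_size):
        t = (start_sample + i) / SAMPLE_RATE
        s = 0.0
        for k, amp in enumerate(harmonic_amps, start=1):
            s += amp * math.sin(2.0 * math.pi * k * fundamental * t)
        out[i] = s
    return out


# Three harmonics of A3 for the first 1024-sample buffer
first = fill_buffer(1024, 220.0, [1.0, 0.5, 0.25], start_sample=0)
```

Deriving each phase from `start_sample + i` keeps the harmonics in phase no matter when a buffer is requested. In Unity, a loop like this would typically run inside an audio callback such as `OnAudioFilterRead`, where missing the deadline produces exactly the stutter described above.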

This made me wonder if other synthesizers used the GPU to parallelize the process, and I stumbled right into the idea of granular synthesis. Typically, this technique splits source audio into very short "grains," played nearly simultaneously. Adjusting the timing and playback parameters can create new frequencies and sonic textures that were never present in the original sound.
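A bare-bones version of classic CPU granular synthesis might look like the sketch below. It is a hypothetical illustration, not Synulator's code. `granulate` cuts Hann-windowed grains from random positions in a source signal and overlap-adds them at random output positions. The window fades each grain in and out, which avoids clicks at the grain edges.

```python
import math
import random


def hann(n, length):
    """Hann window value for sample n of a grain (length must be >= 2)."""
    return 0.5 - 0.5 * math.cos(2.0 * math.pi * n / (length - 1))


def granulate(source, out_len, grain_len, num_grains, seed=0):
    """Overlap-add windowed grains taken from random source positions.

    Assumes len(source) >= grain_len and out_len >= grain_len.
    """
    rng = random.Random(seed)  # seeded so results are reproducible
    out = [0.0] * out_len
    for _ in range(num_grains):
        src_start = rng.randrange(0, len(source) - grain_len + 1)
        dst_start = rng.randrange(0, out_len - grain_len + 1)
        for n in range(grain_len):
            out[dst_start + n] += source[src_start + n] * hann(n, grain_len)
    return out
```

Changing `grain_len`, the number of grains, or how densely they overlap changes the texture, even though every sample originates from the same source.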

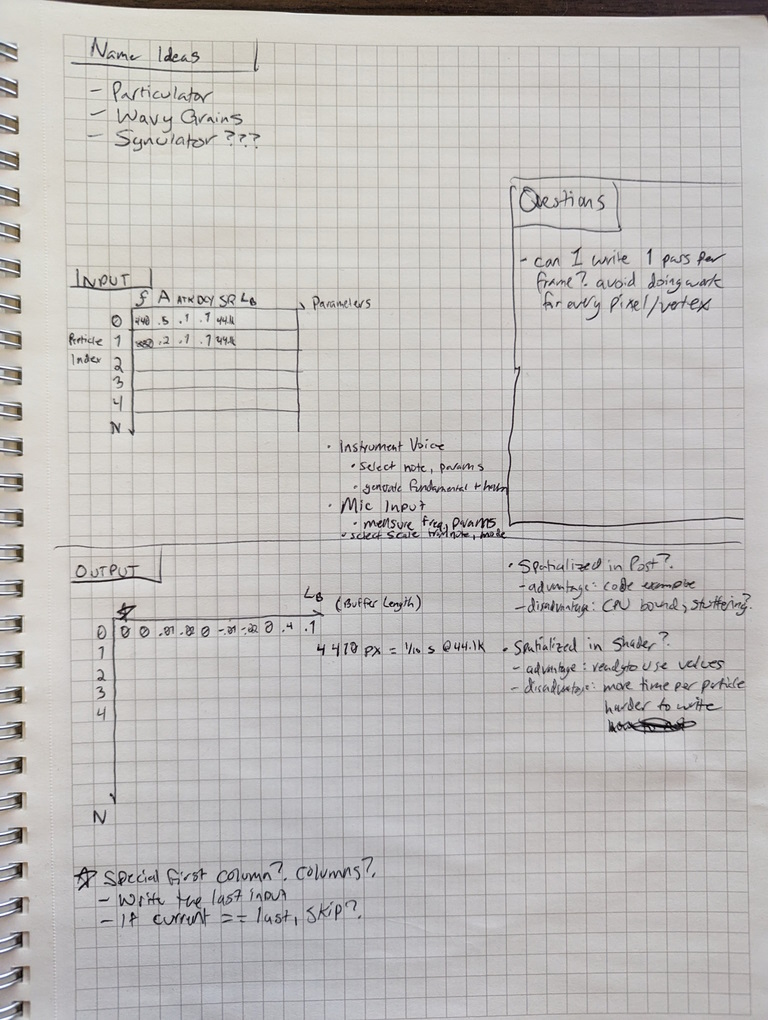

The concept of a GPU-driven granular synthesizer began to solidify, and I started researching my options. I didn't know exactly how, or if, I could build one, but found just enough information to categorize it as "probably not impossible." I had very limited experience with Unity's HLSL shader code, or any custom shaders at all, but I started scribbling my initial thoughts on paper (notebook page at the end).

Particles could be used as both visual markers and points in 3D space for spatialization. Then, each particle could calculate one grain of audio. But because of the way shaders actually work, I slightly altered the plan. Grains could be calculated like a sprite map: one 2D texture as Output, containing the audio for every grain.

Each row of pixels would represent one grain of mono audio, unspatialized, over a short length of time. The number of simultaneous grains would be limited by the height of the Output texture. The maximum length of time represented by a single "pass" (one image) would be limited by the width.

I didn't know what capacity would work, but each pass would calculate the wave value from Sample 0 to Sample 1023 or 2047 or 4095. Graphics cards are pretty good at calculating pixel colors in parallel, but the big challenge would be transferring the result from GPU land to CPU land.
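The width trade-off is easy to put in numbers. The sketch below assumes a 48 kHz output rate, 1024 grain rows, and 4 bytes per pixel (for example, one 32-bit float). None of these values come from this section, and the `pass_stats` helper is hypothetical. Under those assumptions, a 2048-sample pass covers about 42.67 ms of audio and requires an 8 MiB readback, which hints at why the GPU-to-CPU transfer is the hard part.

```python
SAMPLE_RATE = 48000  # assumed output rate
BYTES_PER_PIXEL = 4  # assumed, e.g. one 32-bit float per pixel


def pass_stats(width, height, sample_rate=SAMPLE_RATE, bytes_per_pixel=BYTES_PER_PIXEL):
    """Return (milliseconds of audio per pass, bytes read back per pass)."""
    duration_ms = 1000.0 * width / sample_rate
    readback_bytes = width * height * bytes_per_pixel
    return duration_ms, readback_bytes


for width in (1024, 2048, 4096):
    ms, size = pass_stats(width, 1024)
    print(f"{width} samples -> {ms:.2f} ms of audio, {size / 2**20:.0f} MiB per readback")
```

A wider texture covers more time per pass, but every extra column adds another full column of pixels (one per grain) to the readback.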

With a vague plan in place, and no idea if I had the technical ability, I hacked apart Unity components. I created a project using the 3D FPS template, which provided a basic environment to build everything in with almost zero effort. I quickly realized (yet again) that shaders work differently than I had assumed. Another 2D texture was required for the Input: the Output shader must read each Input pixel in the current row (Y), then calculate the wave value at the point in time (the Sample Index) represented by the column (X).
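Emulating the per-pixel logic on the CPU makes the shader's job concrete. In this hypothetical sketch, `render_pass` plays the role of the Output shader: every output pixel (x, y) reads Input row y and returns the wave value at sample index x. Several details are assumptions, not the actual Grain Output shader:

- the parameter order
- the linear attack/decay envelope
- the sine-to-sawtooth meaning of the shape parameter
- the 48 kHz rate
- the parameters are already decoded to Hz and seconds, rather than stored as 0-to-1 pixel values

```python
import math

SAMPLE_RATE = 48000  # assumed


def grain_sample(params, x):
    """Emulate one Output pixel: the wave value of one grain at sample index x.

    params (hypothetical order): frequency in Hz, amplitude, attack in s,
    decay in s, lifetime in s, shape (0.0 = sine ... 1.0 = sawtooth).
    """
    freq, amp, attack, decay, lifetime, shape = params
    t = x / SAMPLE_RATE
    if t >= lifetime:
        return 0.0  # grain has ended
    # Linear attack ramp, then linear decay over the end of the lifetime
    if t < attack:
        env = t / attack
    elif t > lifetime - decay:
        env = (lifetime - t) / decay
    else:
        env = 1.0
    phase = (freq * t) % 1.0
    sine = math.sin(2.0 * math.pi * phase)
    saw = 2.0 * phase - 1.0
    wave = (1.0 - shape) * sine + shape * saw  # blend between the two shapes
    return amp * env * wave


def render_pass(input_rows, width):
    """CPU stand-in for one shader pass: Output[y][x] = f(Input[y], x)."""
    return [[grain_sample(row, x) for x in range(width)] for row in input_rows]
```

On the GPU, each `grain_sample` call would run independently and in parallel, one per pixel. Here the nested list comprehension simply loops over every (x, y) pair.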

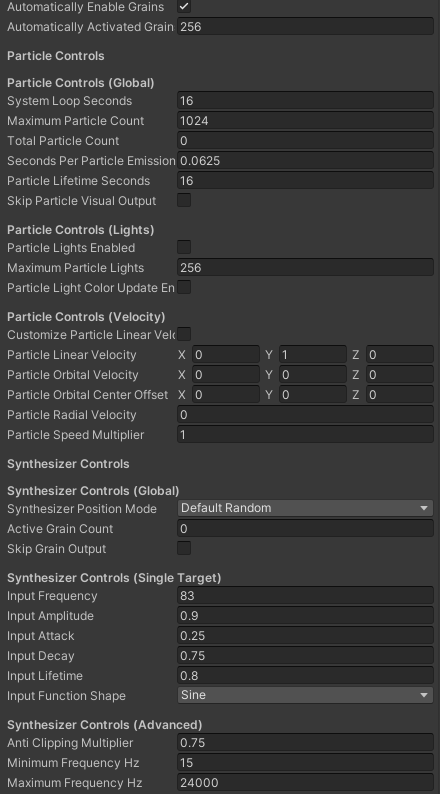

The current setup uses an Input texture of 8 × 1024 pixels (width × height) and an Output texture of 2048 × 1024 pixels. Here's a rundown of the pipeline, which (pretty much) happens every frame:

- Parameters are set per grain in RAM: Frequency, Amplitude, Attack, Decay, Lifetime, Waveform Shape

- Particle positions are measured or fixed in place, pre-calculating Left/Right and Distance attenuation constants

- Input texture is written with pixels, calculated by converting a float (range 0 to 1) to a Color

- Output texture is calculated by requesting one pass at a particular resolution, using the special Grain Output shader

  - This was very painful, since shaders aren't easily debugged

  - This open source project unblocked initial performance issues: GPU requests are queued and retrieved later

- Colors are copied from the output texture pixels and converted to an array of floats (from -1 to 1)

- Each grain's array is converted from mono to stereo, with basic spatialization applied using the associated particle's position

- Each stereo array is summed together with anti-clipping parameters, then added to the queue of ready-to-copy buffers

- Whenever the next chunk of audio is required, a spatialized stereo buffer is popped off the queue and used
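The CPU half of the pipeline (decoding, panning, mixing, and queueing) might look like the minimal sketch below. Everything here is an assumption for illustration:

- pixels store samples remapped to [0, 1]
- panning uses an equal-power law with simple inverse-distance attenuation
- `tanh` soft clipping stands in for the anti-clipping parameters
- each pass's width matches the audio buffer the engine requests

Buffers are interleaved stereo (`[L0, R0, L1, R1, ...]`).

```python
import math
from collections import deque


def decode_pixel(value):
    """Map a stored pixel value in [0, 1] back to an audio sample in [-1, 1]."""
    return value * 2.0 - 1.0


def pan_gains(pan, distance, rolloff=1.0):
    """Equal-power left/right gains with inverse-distance attenuation.

    pan: -1.0 (hard left) .. 1.0 (hard right); distance: >= 0.
    """
    angle = (pan + 1.0) * math.pi / 4.0  # maps pan to 0 .. pi/2
    atten = 1.0 / (1.0 + rolloff * distance)
    return math.cos(angle) * atten, math.sin(angle) * atten


def mix_pass(output_rows, grain_gains, headroom=0.25):
    """Sum every grain row into one interleaved stereo buffer."""
    width = len(output_rows[0])
    mixed = [0.0] * (2 * width)
    for row, (gain_l, gain_r) in zip(output_rows, grain_gains):
        for i, pixel in enumerate(row):
            s = decode_pixel(pixel)
            mixed[2 * i] += s * gain_l
            mixed[2 * i + 1] += s * gain_r
    # Soft clip so dense grain clouds saturate smoothly instead of hard clipping
    return [math.tanh(headroom * v) for v in mixed]


ready = deque()  # finished stereo buffers, oldest first


def produce(output_rows, grain_gains):
    """Called after a GPU readback completes: mix it and queue the result."""
    ready.append(mix_pass(output_rows, grain_gains))


def on_audio_request(buffer_len):
    """Called when the audio engine needs data: pop a buffer, or play silence."""
    return ready.popleft() if ready else [0.0] * buffer_len
```

The queue decouples the two clocks. The render loop calls `produce` whenever a readback finishes. The audio callback pops one buffer each time it needs data and falls back to silence if the GPU side has fallen behind, rather than stalling.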

With some fine-tuning, performance improvements, and plenty of frustration, I had a working result! All of the Input parameters were exposed as public properties, allowing me to modify them at runtime and hear the synthesizer react in different ways. It even ran smoothly on my Steam Deck!

After the pipeline became functional, I spent time adding visual and audio settings. It's a synthesizer, so I needed on/off toggles for expensive visuals and limiters for GPU audio calculations. I also limited the default number of automatically activated grains to 256 and added a basic "Single Target" section for testing Input parameters. Another screenshot below has a preview of the 0.0.0 version's parameters!

It was definitely capable of creating interesting sounds, but at this point it desperately needed a basic UI. Version 0.0.1 and later releases are coming soon, and they include a critical feature: a way to close the executable. Thanks for stopping in!


Synulator

Real-time granular synthesizer with GPU audio processing

| Field | Value |
|---|---|
| Status | Prototype |
| Category | Tool |
| Author | strati.farm |
| Tags | Audio, Experimental, granular-synthesis, Instrument, Music, Music Production, Sound effects, Soundtoy, synthesizer, Synthwave |
| Accessibility | High-contrast, Blind friendly |

More posts

- [0.0.4] Post-jam improvements, and a preview (Mar 13, 2024)

- [0.0.3] New keyboard display and many fixes! (Jan 15, 2024)

- [0.0.2] Hotfix for performance and controls (Jan 09, 2024)

- [0.0.1] First playable release! (Jan 09, 2024)
